Architects design according to the needs of their clients. During the design process they therefore communicate with their clients constantly and involve them in order to gather feedback and make sure those needs are met. This information is mostly delivered through digital media such as CAD drawings, 3D models and rendered images, which architects use to explain how their design proposal satisfies their clients' needs and solves their problems. At this level of participation, clients mainly listen to what is being planned for them. Today, however, technology makes it possible to deepen that participation by giving clients the ability to take action in the design process themselves.

But how? Architects draw on their education and technical background when they design, and clients who lack that background face challenges when they try to take part in the process. This project therefore aims to provide a platform that balances the clients' design ability with the architects' and, at the same time, integrates with the architectural design process.

20 weeks

Master Thesis

2015

Pen and Paper, Unity3d, C#, Apple Developer, Xcode, 3ds MAX, Adobe After Effects, Sketch, Boujou VFX

My solution aims to create this balance by giving clients augmented/mixed reality and embodied interaction. Both are delivered through a smartphone application, because these features require the user to be active and connected with the physical world.

Architects, in turn, are given a desktop platform that they can use at their office without being distracted. This platform reflects the clients' contributions and helps the architect mark where in the project he needs feedback.

The result is a cross-platform concept in which the smartphone application and the desktop platform are connected to and dependent on each other.

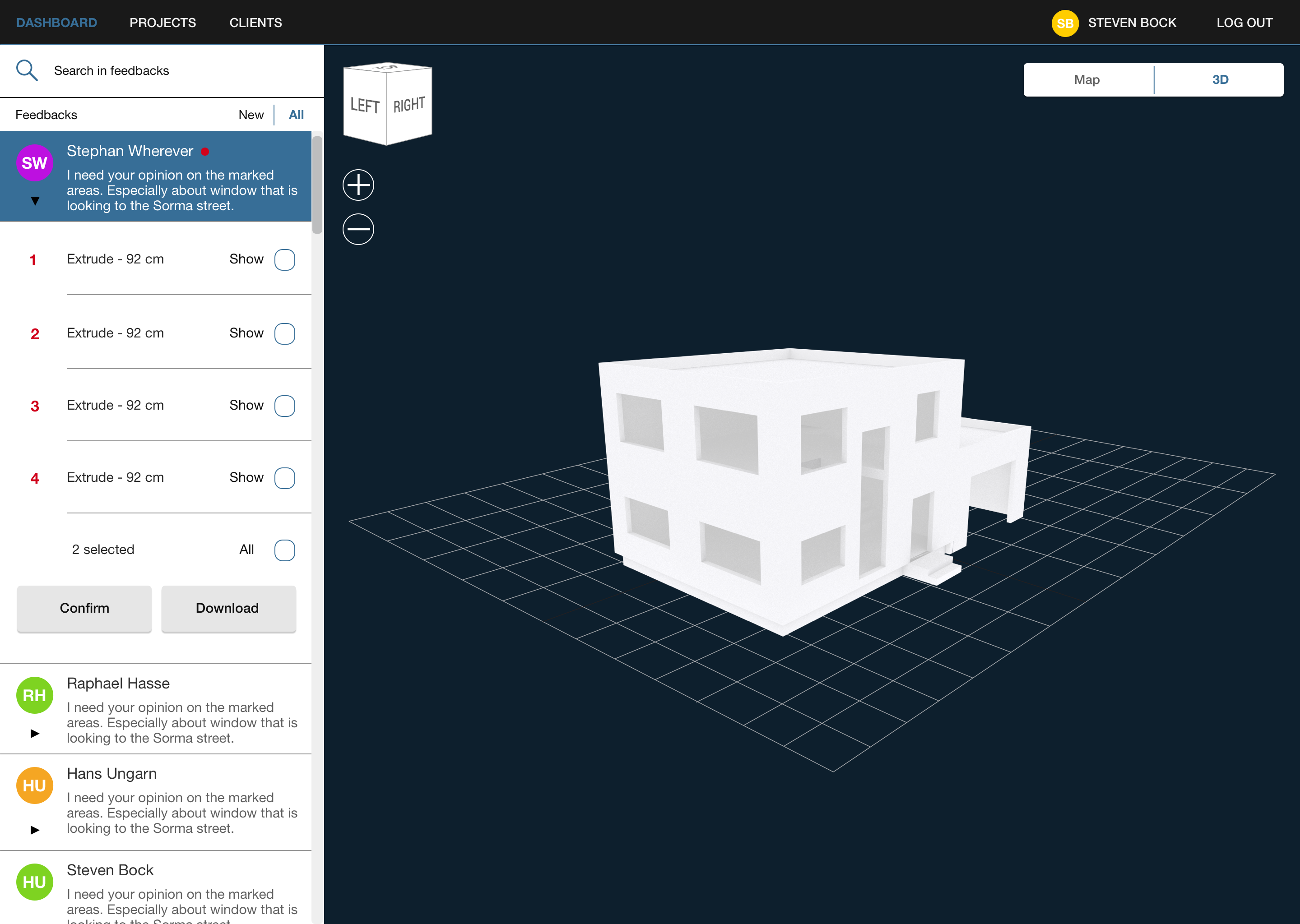

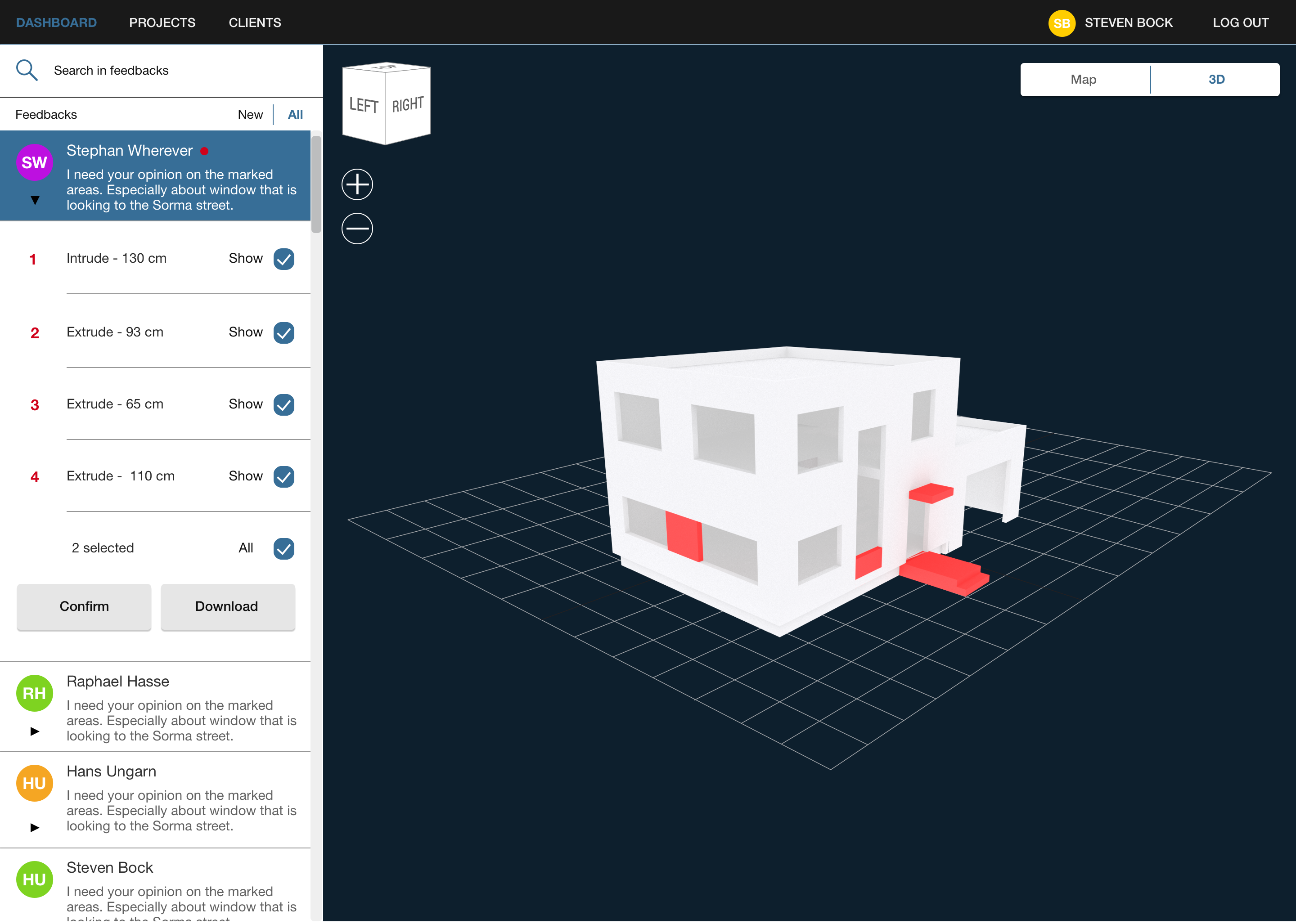

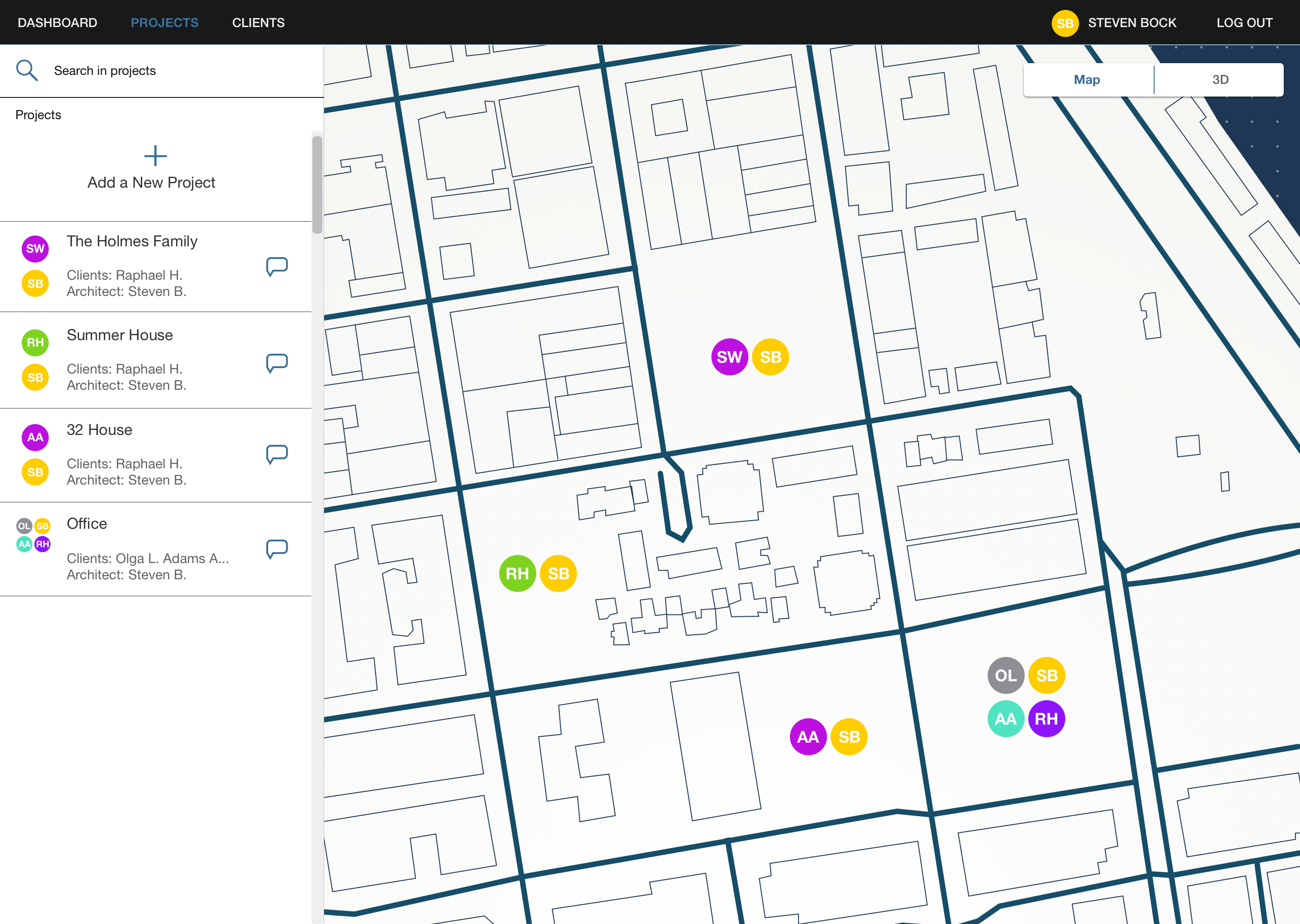

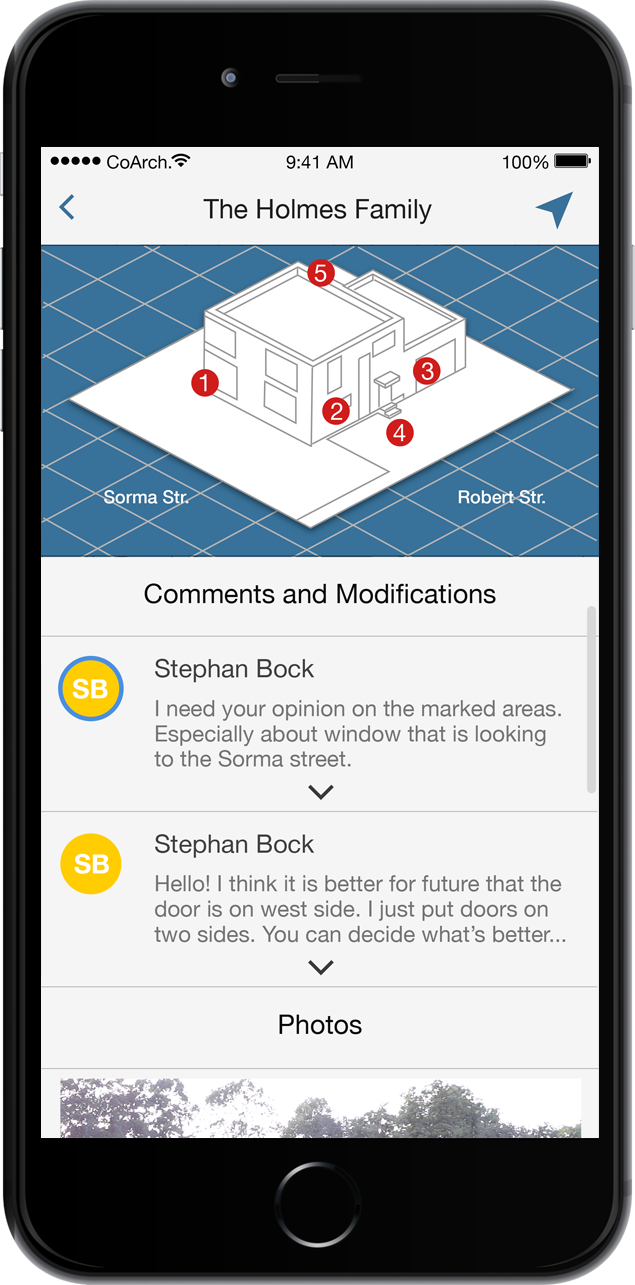

The home page of the desktop platform, called the Dashboard, lists the feedback that comes from clients. By selecting a feedback item, the user can see the project on the map or switch to 3D mode to see what kind of changes the client made.

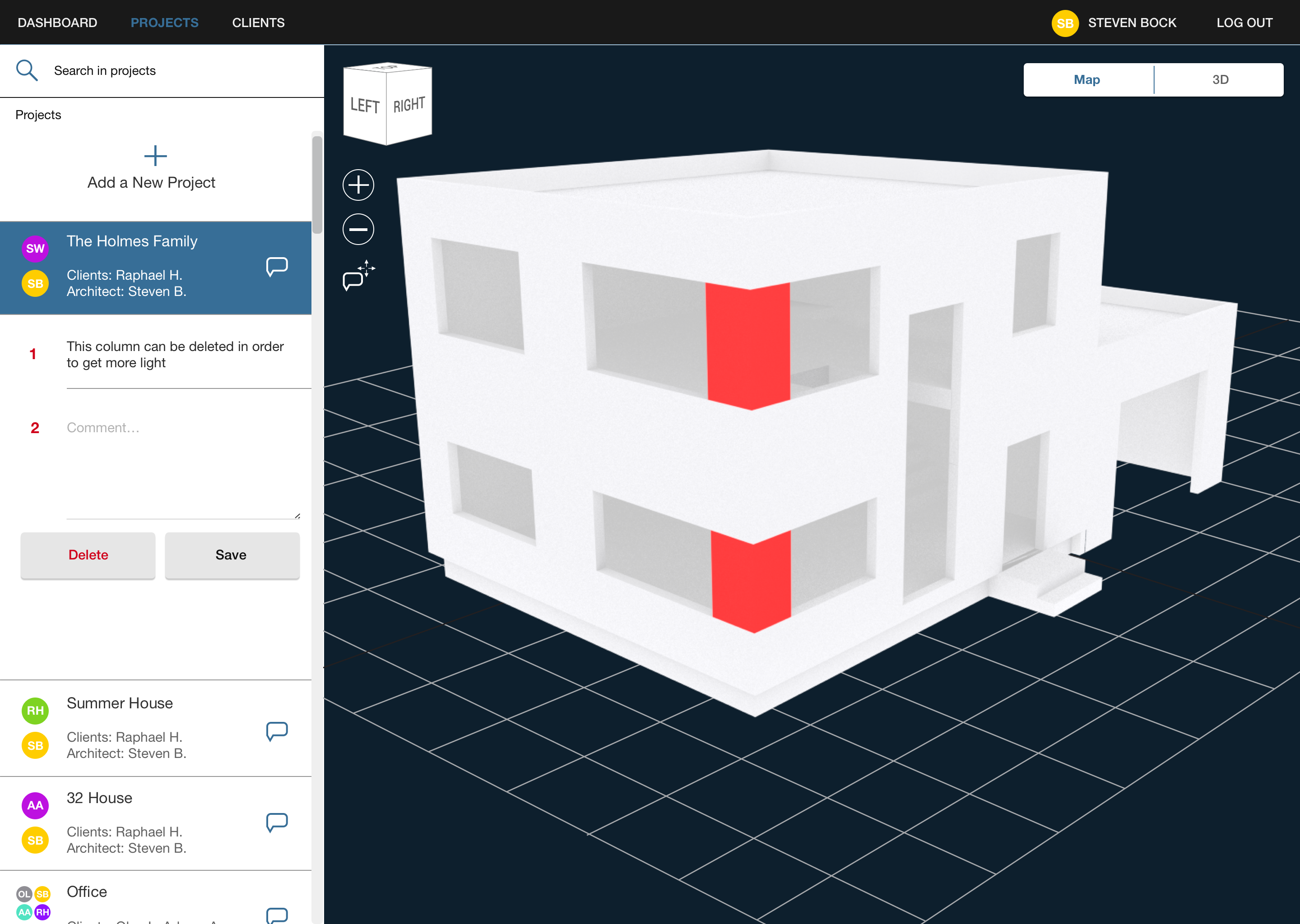

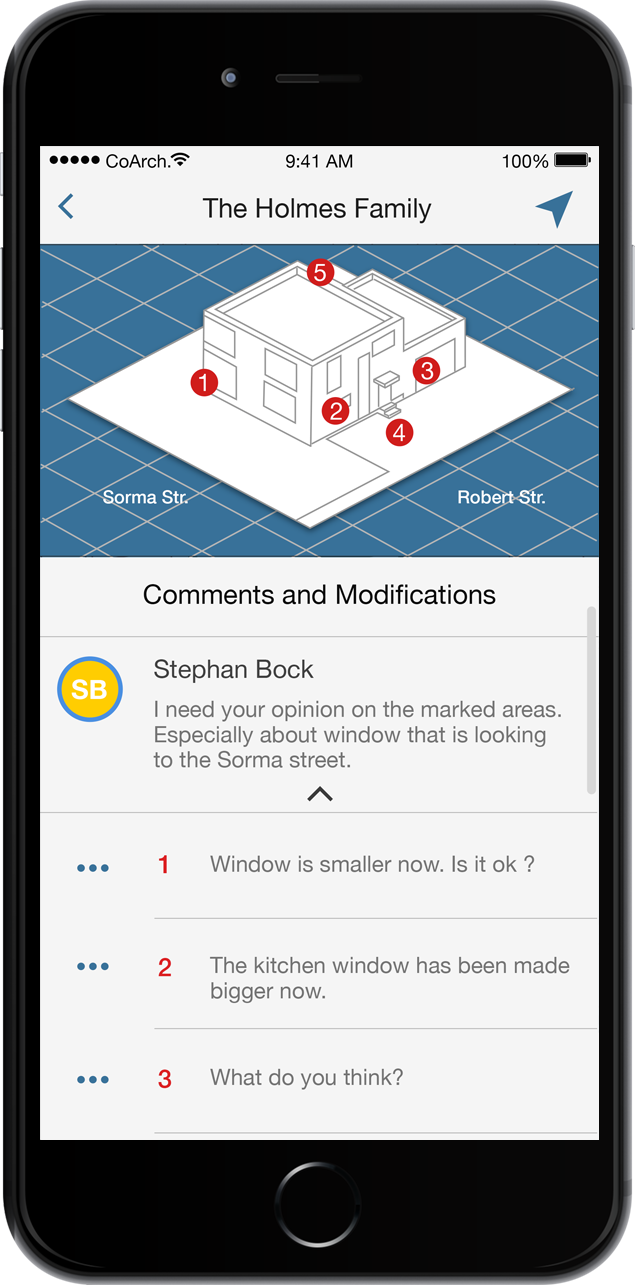

By clicking the dropdown icon of a feedback item, the architect sees, one by one, where in the project the client made changes. He can then toggle each modification he wants to see on the 3D model of the project.

After toggling the modifications, the architect can either download the data or confirm the changes the client made. When he confirms them, the system sends the client a notification about the approved modifications. Based on that, the client can continue making further changes, which creates a loop that lasts until he and the architect reach a compromise.
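The confirm-and-notify loop can be sketched as a small data model. This is a minimal illustration under stated assumptions, not the platform's actual implementation: the names `Modification`, `FeedbackThread`, `submit`, `pending` and `confirm` are hypothetical, and changes the architect does not confirm are simply left pending for a later round.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"


@dataclass
class Modification:
    """One change a client made to a marked part of the model (hypothetical)."""
    mod_id: int
    part: str
    description: str
    status: Status = Status.PENDING


@dataclass
class FeedbackThread:
    """Successive rounds of client changes for one project (hypothetical)."""
    project: str
    modifications: list[Modification] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)

    def submit(self, mod: Modification) -> None:
        # Client side: a new change reaches the architect's Dashboard as pending.
        self.modifications.append(mod)

    def pending(self) -> list[Modification]:
        return [m for m in self.modifications if m.status is Status.PENDING]

    def confirm(self, mod_ids: set[int]) -> str:
        # Architect side: approve the toggled changes, then notify the client.
        approved = []
        for m in self.pending():
            if m.mod_id in mod_ids:
                m.status = Status.APPROVED
                approved.append(m)
        listed = ", ".join(f"#{m.mod_id} ({m.part})" for m in approved) or "none"
        message = f"{self.project}: approved {listed}"
        self.notifications.append(message)
        return message
```

Each call to `confirm` closes one round of the loop; the client reads the notification and may `submit` further changes, which start the next round.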

The second element in the navigation bar takes the architect to a page where he can see previously uploaded projects or add a new one.

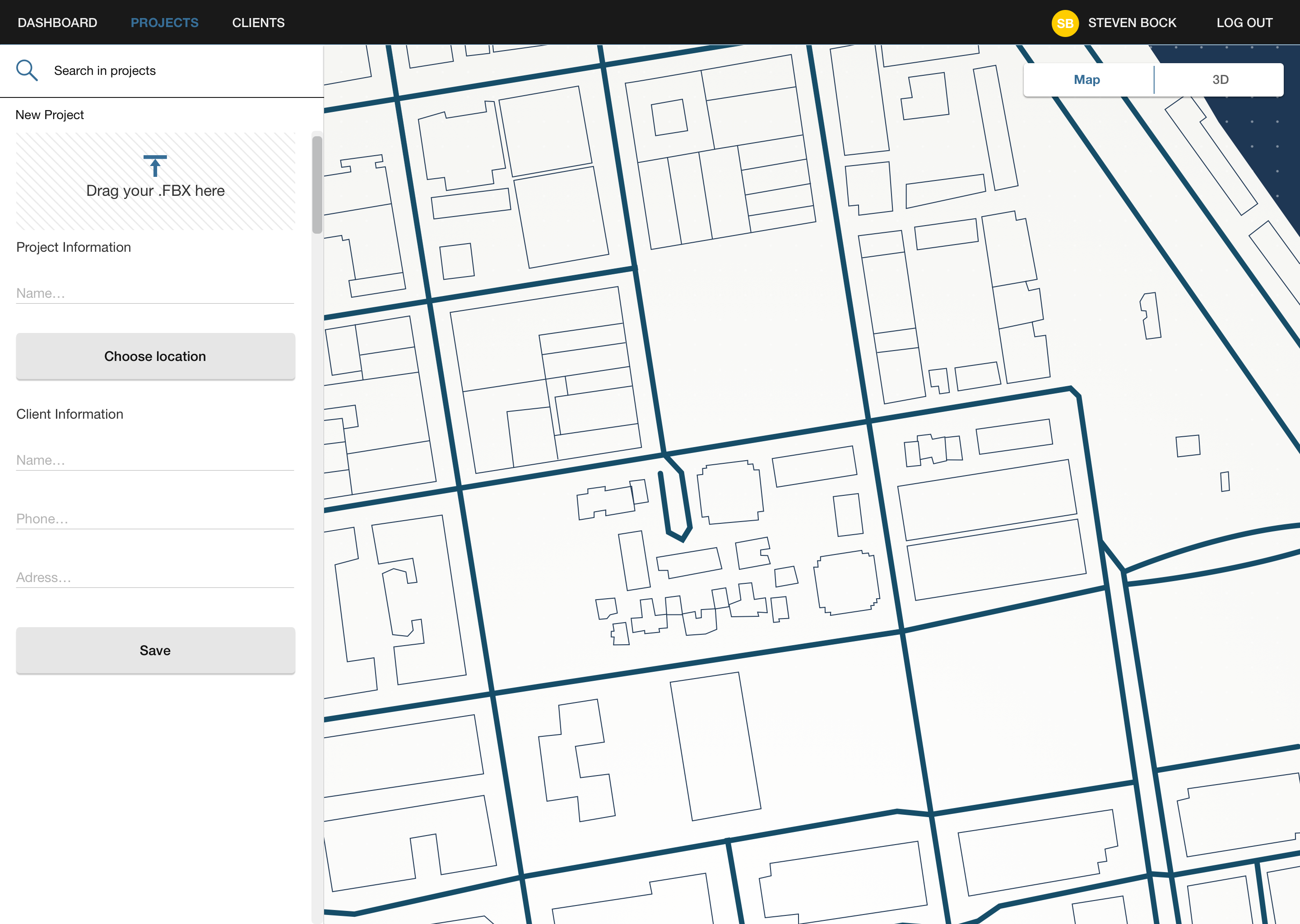

When adding a new project, the architect uploads its 3D data and enters further information. He sets the location by drawing an area on the world map.
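Once the site is stored as a polygon drawn on the map, the app could decide whether a client is standing on the site with a standard ray-casting point-in-polygon test. The sketch below is an assumption about how such a check might work, not part of the original concept description; the function name `point_in_area` is hypothetical, and it treats latitude/longitude as planar coordinates, which is an acceptable approximation for an area the size of a building site.

```python
def point_in_area(lat: float, lon: float, area: list[tuple[float, float]]) -> bool:
    """Ray-casting test: is (lat, lon) inside the polygon drawn on the map?

    `area` holds (lat, lon) vertices in drawing order. Coordinates are treated
    as planar, which is reasonable for a small area such as a building site.
    """
    inside = False
    n = len(area)
    for i in range(n):
        lat1, lon1 = area[i]
        lat2, lon2 = area[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude at which this edge crosses the point's latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            # Count crossings of a ray cast towards increasing longitude.
            if lon < cross_lon:
                inside = not inside
    return inside
```

An odd number of crossings means the point lies inside the drawn area.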

After entering all the information, the architect marks the polygons or parts of the project on which he needs his client's contribution and feedback by dragging the comment icon in the 3D view onto those parts of the model. For each one, a new row with a comment field appears in the left panel, where he writes what the client should read.
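Each dropped comment icon can be thought of as a numbered pin that links a part of the model to the text the client will read. The structure below is a hypothetical sketch: `CommentPin`, `PinBoard`, `drop` and `rows` are illustrative names, and numbering pins in drop order is an assumption chosen to match the numbered markers the client later sees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommentPin:
    """A comment icon dropped onto the 3D model (hypothetical structure)."""
    number: int                           # label shown on the numbered marker
    part_id: str                          # polygon or part the icon was dropped on
    position: tuple[float, float, float]  # drop point in model coordinates
    comment: str                          # text the client should read


class PinBoard:
    """Collects pins in drop order, mirroring the rows in the left panel."""

    def __init__(self) -> None:
        self.pins: list[CommentPin] = []

    def drop(self, part_id: str, position: tuple[float, float, float],
             comment: str) -> CommentPin:
        # Each drop adds one row; numbers follow the order of placement.
        pin = CommentPin(len(self.pins) + 1, part_id, position, comment)
        self.pins.append(pin)
        return pin

    def rows(self) -> list[str]:
        # One text row per pin, as listed next to the 3D view.
        return [f"{p.number}. [{p.part_id}] {p.comment}" for p in self.pins]
```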

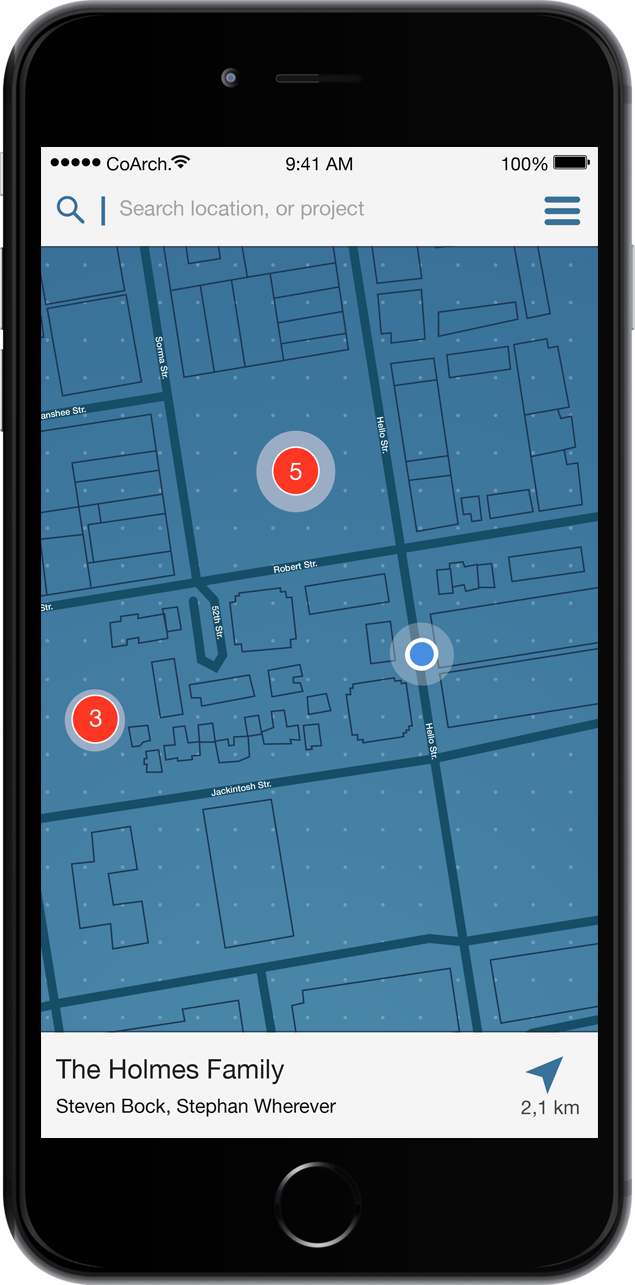

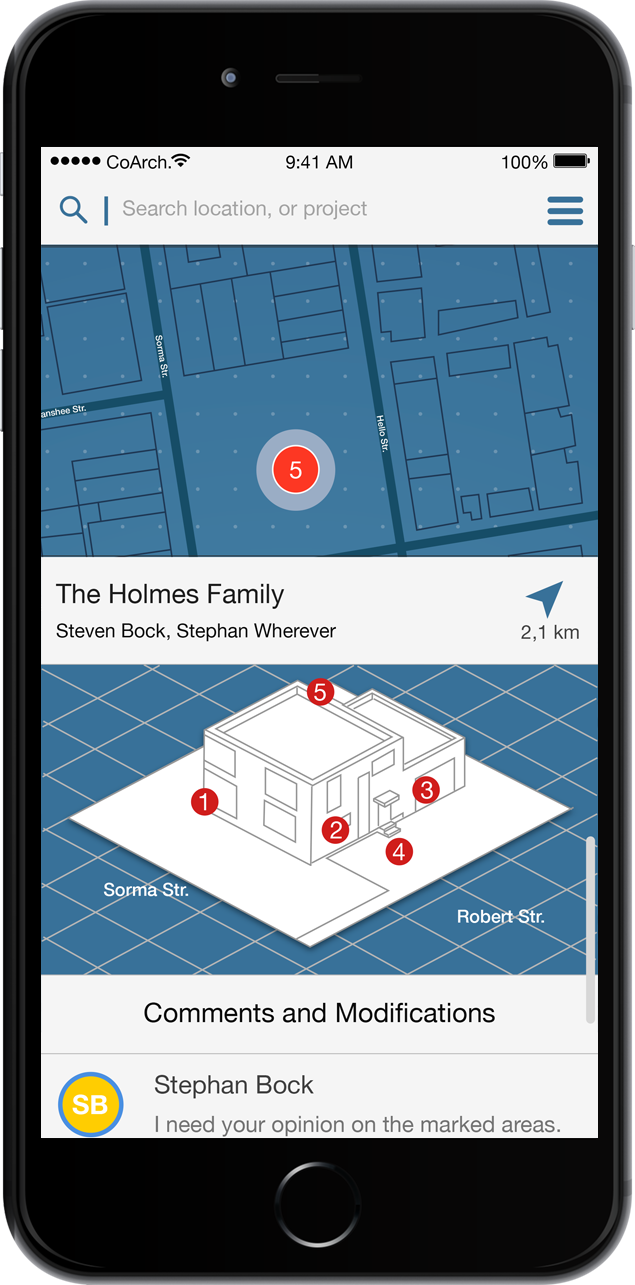

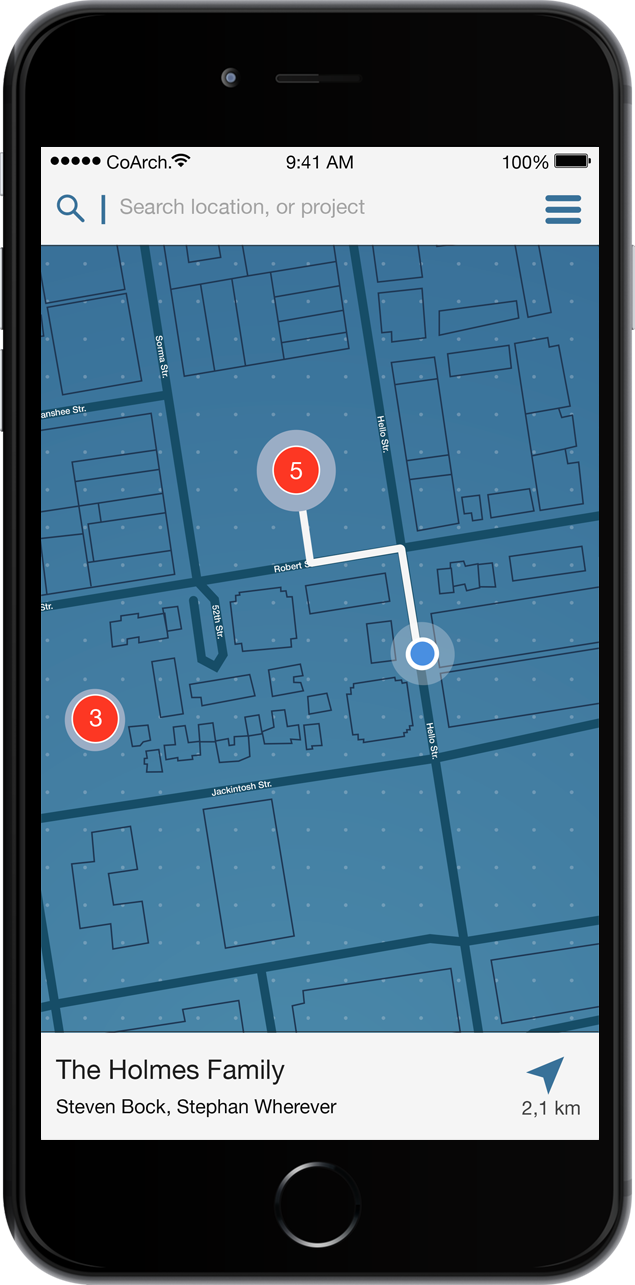

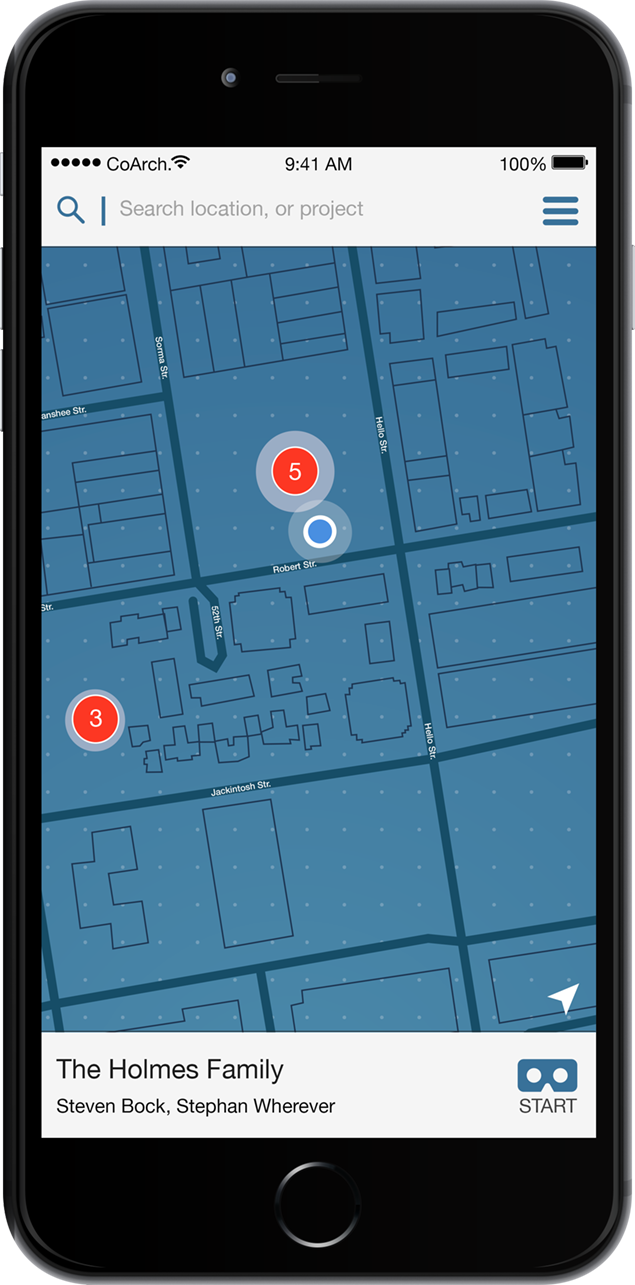

In the smartphone application, the marks made by the architect appear on the map as red circles with numbers; the map shows only new marks. By selecting a project, the client gets more information about where in the project the architect needs his contribution. When he wants to give feedback, he starts the navigation and the app guides him to the location.

After reaching the location, the client switches to augmented/mixed reality mode by pushing the start icon. The user interface can be seen in the small prototype below.

During the concept phase, I built augmented/mixed reality prototypes and tested them as if I were a client. My aim was to understand what obstacles a user would face and how they could be solved. I also analyzed the technical obstacles, GPS being one of them: the position generally updated only every two or three footsteps, which prevented a fluent user experience.
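One common way to soften sparse GPS updates, and not necessarily what these prototypes did, is to let the displayed position ease toward the latest fix every frame instead of jumping to it. The sketch below assumes positions already converted to local metres (east, north); the class `SmoothedPosition` and its `time_constant` parameter are hypothetical.

```python
import math


class SmoothedPosition:
    """Eases the displayed position toward the latest GPS fix each frame.

    Positions are assumed to be local (east, north) metres. `time_constant`
    (seconds) is an assumed tuning value: smaller reacts faster, larger is smoother.
    """

    def __init__(self, x: float, y: float, time_constant: float = 0.5) -> None:
        self.x, self.y = x, y                # position currently displayed
        self.target_x, self.target_y = x, y  # latest GPS fix
        self.time_constant = time_constant

    def on_gps_fix(self, x: float, y: float) -> None:
        # A new fix only moves the target; the display catches up gradually.
        self.target_x, self.target_y = x, y

    def update(self, dt: float) -> tuple[float, float]:
        # Frame-rate independent blend factor: 1 - exp(-dt / time_constant).
        alpha = 1.0 - math.exp(-dt / self.time_constant)
        self.x += (self.target_x - self.x) * alpha
        self.y += (self.target_y - self.y) * alpha
        return self.x, self.y
```

Calling `update` once per rendered frame with the frame's duration keeps the virtual model gliding between fixes rather than snapping every few steps; because the blend factor depends on elapsed time, the motion looks the same at different frame rates.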

The video below shows the visual experience and embodied interaction this project aimed for in augmented/mixed reality mode.